Eric Kapilik is an Engineering Student at the University of Manitoba who has returned to JCA Electronics for a second 4-month summer term in the JCA Electronics Engineering Group. During his first summer term at JCA he developed a Tradeshow Demo for JCA’s Autonomous Framework. This summer he is doing research and development for technology within the Autonomous Framework relating to obstacle detection and environmental perception.

Introduction

Over this summer I have had the opportunity to do research and development in the exciting field of autonomous perception. Specifically, I worked for the Autonomous development team. I researched, prototyped, developed, and tested perception sensors and algorithms for object detection.

Object detection and perception are relatively new technologies that are developing both inside and outside of agricultural applications. Although they were previously confined mostly to academic research and cutting-edge start-up companies, autonomous technologies, including perception, have been accelerating and changing the mainstream automotive industry for the past decade.

Autonomous navigation will be disruptive in the agricultural industry as specialized knowledge increases. Other industries have seen more progress in autonomous development, and much of that development and technology has transferred, and will continue to transfer, well into agriculture. Similar sensors can be used in both automotive and agricultural applications. However, many algorithms for autonomous operation are specialized for urban settings, where the environment is more structured, neat, and organized. Agricultural environments are less complex than urban settings, with fewer obstacles and moving objects, but they bring other challenges, such as varying crop size, density, and growing pattern. Fields, orchards, and vineyards vary greatly, with holes, dips, hills, and water, in contrast to the straight, predictable, lined pavement of a road. Urban environments also do not have large amounts of dust or debris bombarding the sensors.

The JCA Electronics autonomous platform currently uses dual GPS / RTK GPS sensors to determine the position, heading, and speed of the machine for autonomous driving. These GPS sensors are highly accurate and have shown great results. However, GPS does not provide a dynamic understanding of the immediate environment. It will not detect human workers in the field, ditches, trees, power line poles, or even entire buildings, nor will it detect upcoming difficult terrain or slopes the vehicle must adjust for. Thus, a GPS system alone would not be sufficient for safe autonomous operation.
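For context on how a dual-antenna setup yields heading and speed, here is a minimal sketch. It assumes both antenna fixes have already been converted into a local east/north frame in metres; the `EnPoint` type and the `heading_deg` and `speed_mps` functions are hypothetical names, and this is not the JCA platform's implementation.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical type: a GNSS fix already projected into a local east/north frame (metres).
struct EnPoint {
    double east;
    double north;
};

constexpr double kPi = 3.14159265358979323846;

// Heading of the antenna baseline (rear -> front), in degrees clockwise from north,
// normalized to the range 0..360.
double heading_deg(const EnPoint& rear, const EnPoint& front) {
    const double de = front.east - rear.east;
    const double dn = front.north - rear.north;
    assert(de != 0.0 || dn != 0.0);  // the two antennas must not report the same position
    double deg = std::atan2(de, dn) * 180.0 / kPi;  // atan2(east, north) measures from north toward east
    if (deg < 0.0) deg += 360.0;
    return deg;
}

// Ground speed (m/s) from two successive fixes of the same antenna taken dt_s seconds apart.
double speed_mps(const EnPoint& previous, const EnPoint& current, double dt_s) {
    assert(dt_s > 0.0);
    return std::hypot(current.east - previous.east, current.north - previous.north) / dt_s;
}
```

Heading is taken as the direction of the baseline between the two antennas, while speed comes from successive fixes of a single antenna; neither says anything about obstacles, which is the gap perception fills.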

Perception systems require input data about the surrounding environment, as well as algorithms to process that data into meaningful results. This was the focus of my summer: developing and testing object detection algorithms and evaluating different types of sensors for object detection.

Sensors

So, what sensors would an autonomous mobile machine in an agricultural field use? Several main technologies are commonly used for robotic perception, and each has its own advantages and disadvantages. The most robust systems use a suite of sensor technologies so that the strengths of one sensor cover the weaknesses of another. The four main sensor technologies for perception are cameras, LiDAR, radar, and thermal imaging.

Camera

Cameras provide high-resolution images with color and texture information, and stereovision camera setups can also provide range information by matching corresponding pixels between two cameras and triangulating. However, cameras are sensitive to changing lighting conditions and low-visibility conditions (e.g., rain, fog, smoke, dust), and they have a relatively short range.
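Stereo range comes from triangulation: for a rectified camera pair with focal length f (in pixels) and baseline B (in metres), a pixel seen with disparity d between the two images lies at depth Z = f·B/d. The sketch below assumes an ideal, rectified pinhole camera pair; `StereoParams`, `depth_from_disparity`, and `back_project` are hypothetical names, not the API of the cameras used in this project.

```cpp
#include <cassert>

// 3D point in the left camera frame: x right, y down, z forward (metres).
struct Point3 {
    double x, y, z;
};

// Hypothetical calibration for a rectified stereo pair (ideal pinhole model assumed).
struct StereoParams {
    double f_px;        // focal length in pixels
    double baseline_m;  // distance between the two camera centres
    double cx, cy;      // principal point in pixels
};

// Triangulated depth: Z = f * B / d.
double depth_from_disparity(const StereoParams& p, double disparity_px) {
    assert(disparity_px > 0.0);  // zero disparity corresponds to a point at infinity
    return p.f_px * p.baseline_m / disparity_px;
}

// Back-project pixel (u, v) of the left image, with its disparity, into a 3D point.
Point3 back_project(const StereoParams& p, double u, double v, double disparity_px) {
    const double z = depth_from_disparity(p, disparity_px);
    return {(u - p.cx) * z / p.f_px, (v - p.cy) * z / p.f_px, z};
}
```

For example, with f = 700 px, B = 0.12 m (illustrative values), and a disparity of 42 px, the point is 2 m away. Applying `back_project` to every pixel with a valid disparity yields the stereo point cloud.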

Stereo cameras are a very affordable technology that can produce a point cloud. They also provide color data and 2D images. This allows areas of interest to be detected in 3D and then classified in the 2D image using well-established image classification techniques.

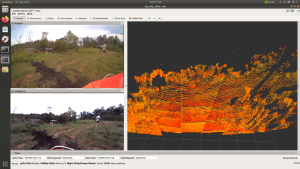

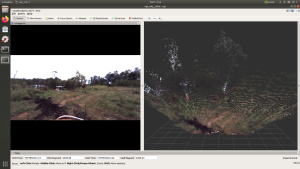

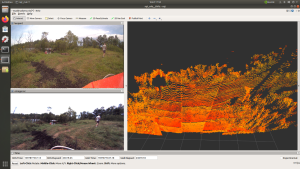

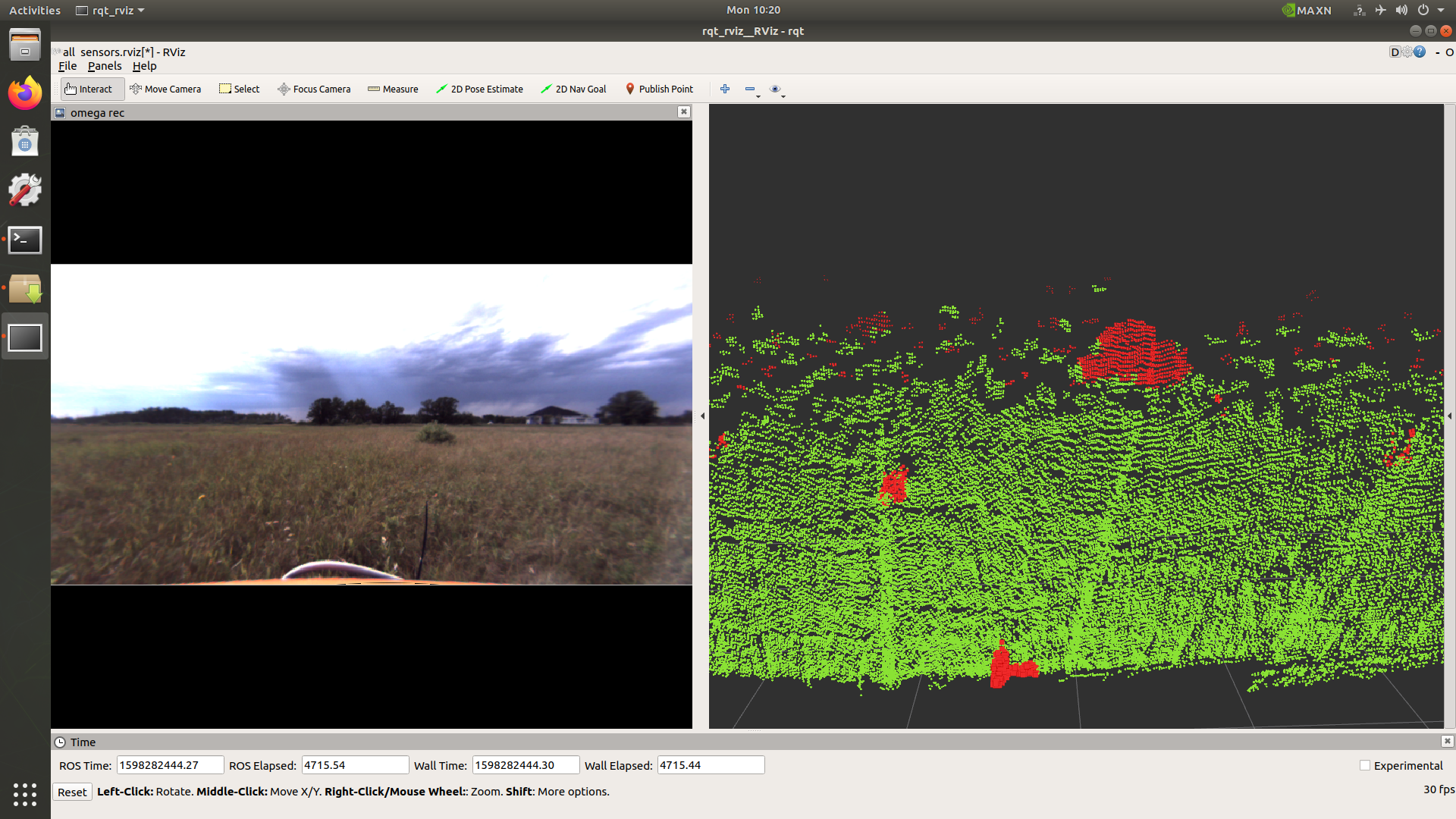

An example of a point cloud from a stereovision camera is shown in Figure 1. On the left is a normal 2D image from a single camera, and on the right is the corresponding stereovision point cloud from two cameras. Each pixel in the image is assigned a range. Because range is computed from the pixel offset (disparity) between the two cameras, which the algorithms resolve in discrete steps, the point cloud depths show some discretization.
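The depth layering in a stereo point cloud can be illustrated numerically. Assuming a matcher that resolves disparity in whole pixels, only the depths Z = f·B/d for integer d can be represented, and the gap between neighbouring layers grows roughly with the square of the distance. Many matchers interpolate sub-pixel disparities, which shrinks these steps but does not remove them. The function names below are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Depths a stereo matcher can represent if it resolves disparity in whole pixels,
// for disparities d_min..d_max (f in pixels, baseline B in metres).
std::vector<double> representable_depths(double f_px, double baseline_m, int d_min, int d_max) {
    assert(d_min > 0 && d_min <= d_max);
    std::vector<double> depths;
    for (int d = d_min; d <= d_max; ++d) {
        depths.push_back(f_px * baseline_m / d);  // Z = f * B / d
    }
    return depths;  // decreasing: a larger disparity means a closer point
}

// Gap between neighbouring depth layers at disparity d and d + 1:
// f*B/d - f*B/(d + 1) = f*B / (d * (d + 1)), which grows roughly with Z squared.
double depth_step(double f_px, double baseline_m, int d) {
    assert(d > 0);
    return f_px * baseline_m / (static_cast<double>(d) * (d + 1));
}
```

With f = 700 px and B = 0.12 m (illustrative values), the step is about 4.7 cm at a disparity of 42 px (2 m away) but 1.5 m at a disparity of 7 px (12 m away), which is why distant surfaces in a stereo point cloud appear as separated layers.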

Figure 1: Stereovision point cloud

Lidar

LiDAR stands for “Light Detection and Ranging”. It measures distance using time-of-flight or phase-shift analysis of eye-safe laser light that illuminates targets and reflects back to the sensor. LiDAR is well suited to medium-range distances, works quickly regardless of lighting conditions, and produces accurate point cloud representations of the surrounding 3D world. However, it can be sensitive to interference from particles such as dust, fog, and rain, and it does not provide rich color information.
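For a pulsed time-of-flight lidar, range follows from the round-trip time of the laser pulse, r = c·t/2, and a spinning lidar reports each return as a range at a known azimuth and laser-channel elevation. The sketch below converts such returns into point cloud coordinates. It does not cover phase-shift ranging, and the axis convention and function names are assumptions rather than the interface of the lidars used here.

```cpp
#include <cassert>
#include <cmath>

constexpr double kSpeedOfLight = 299792458.0;  // m/s

// Sensor-frame point, assumed convention: x forward, y left, z up (metres).
struct Point3 {
    double x, y, z;
};

// Pulsed time-of-flight ranging: the pulse travels out and back, so r = c * t / 2.
double tof_range_m(double round_trip_s) {
    assert(round_trip_s >= 0.0);
    return kSpeedOfLight * round_trip_s / 2.0;
}

// Convert one return of a spinning lidar (range, azimuth about the vertical axis,
// elevation of the laser channel; angles in radians) to a Cartesian point.
Point3 spherical_to_cartesian(double range_m, double azimuth_rad, double elevation_rad) {
    const double horizontal = range_m * std::cos(elevation_rad);  // projection onto the x-y plane
    return {horizontal * std::cos(azimuth_rad),
            horizontal * std::sin(azimuth_rad),
            range_m * std::sin(elevation_rad)};
}
```

For example, a round trip of 200 ns corresponds to a range of about 30 m.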

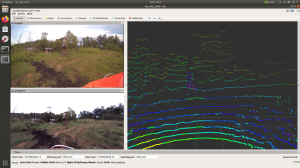

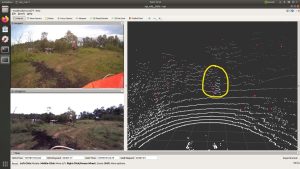

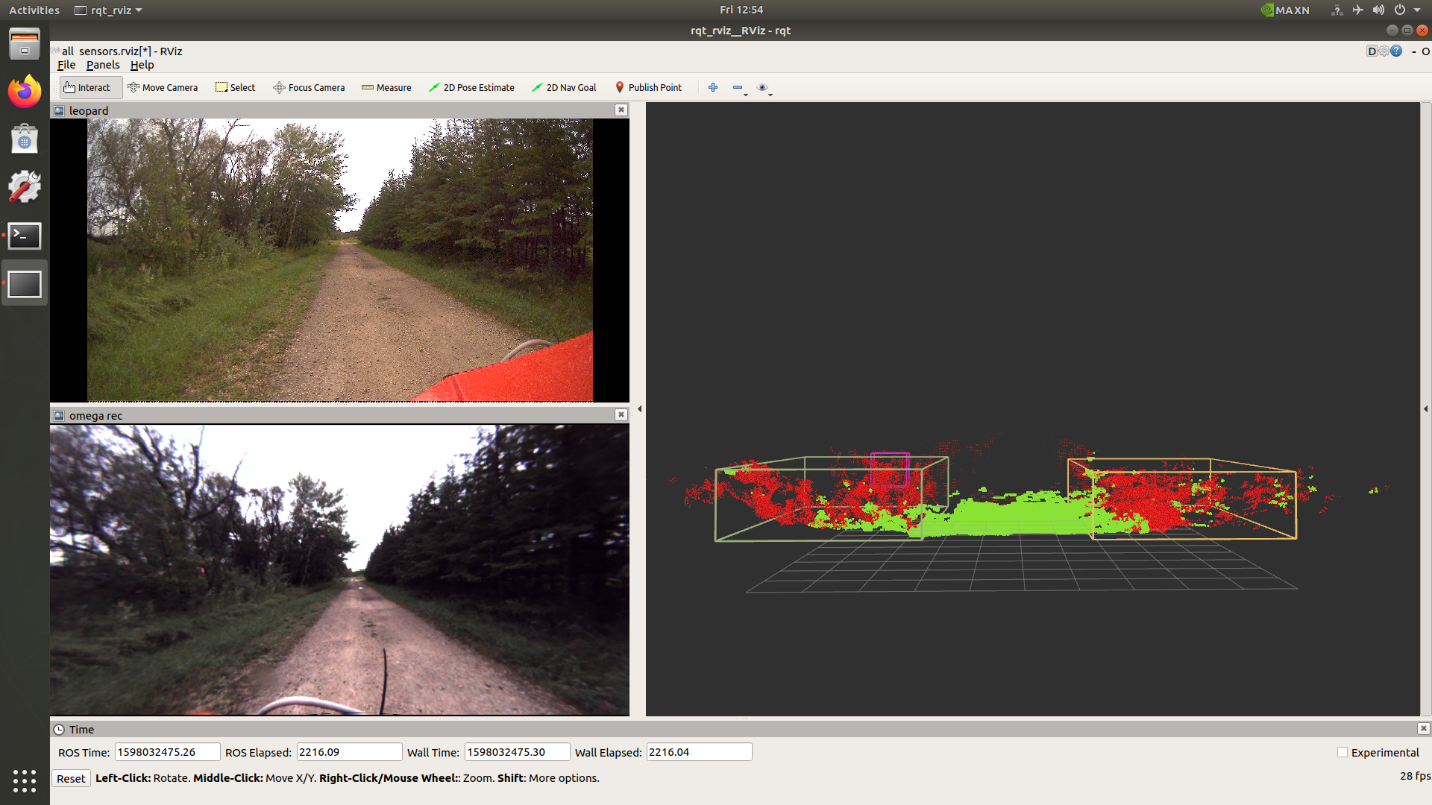

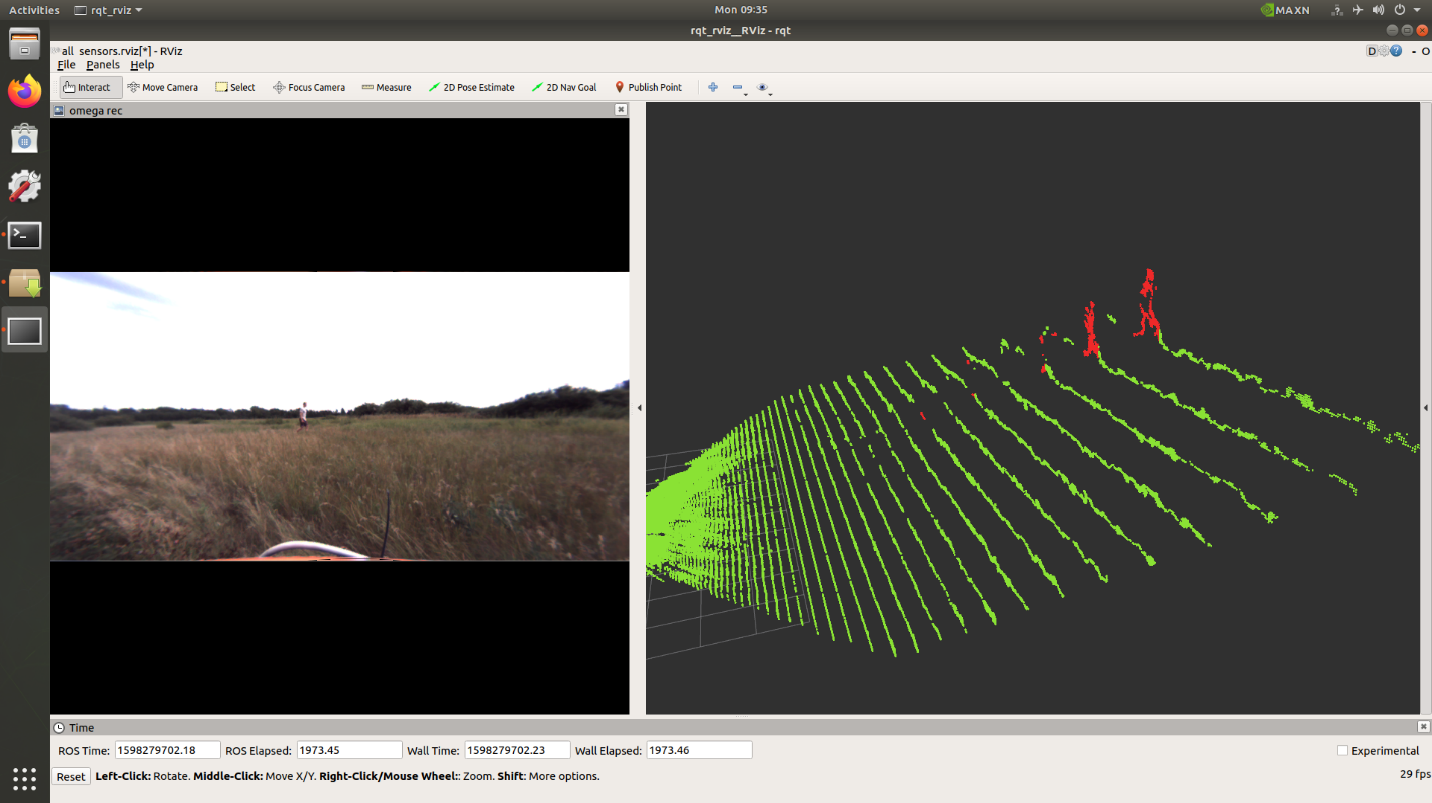

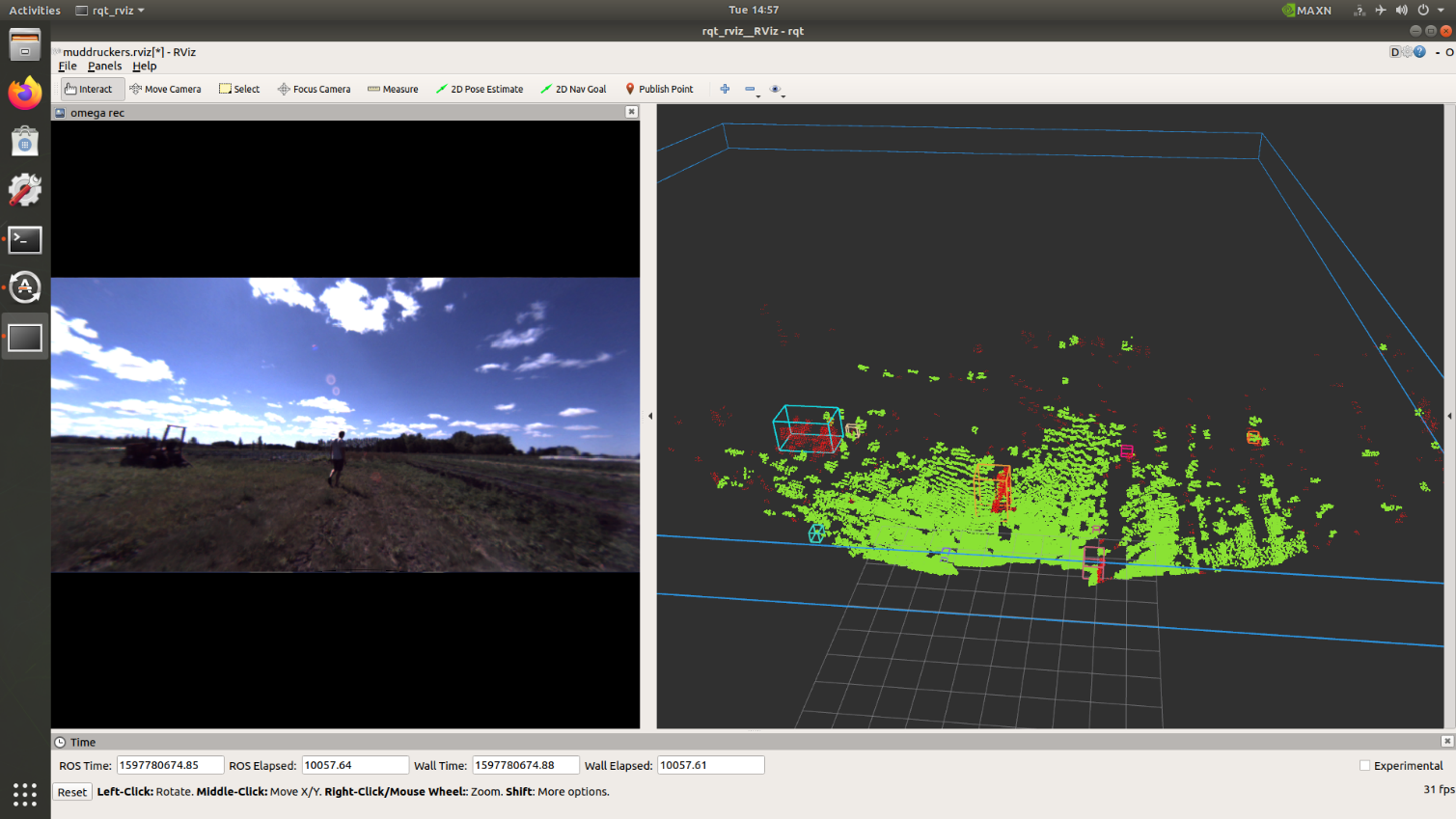

An example of one lidar scan is shown in Figure 2. On the left are images from two different cameras and on the right is a lidar scan. The color indicates the intensity of the received beam. A point cloud from a different lidar is shown in Figure 3.

Figure 2: Lidar A point cloud.

Figure 3: Lidar B point cloud.

Radar

Radar operates on a similar principle to LiDAR but uses a longer wavelength, which means less interference from particles and precipitation. Radar is also invariant to illumination conditions and can detect objects at long distances. However, because of the longer wavelength, radar points have lower resolution. Radar does not give a full picture of the surrounding environment as lidar does; rather, it provides a list of detected targets.
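A radar target list might be represented as below. The field layout is a hypothetical example (real units define their own message formats). Many automotive radars also report a radial (Doppler) velocity per target, which offers one way to pick out moving objects; the velocity filter here assumes a stationary sensor or velocities already compensated for the machine's own motion.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One entry of a radar target list (hypothetical layout for illustration).
struct RadarTarget {
    double range_m;
    double azimuth_rad;          // angle from boresight, positive to the left
    double radial_velocity_mps;  // Doppler velocity; negative when the target approaches
};

// Position in the sensor's horizontal plane: x forward, y left (metres).
struct Point2 {
    double x, y;
};

Point2 to_cartesian(const RadarTarget& t) {
    return {t.range_m * std::cos(t.azimuth_rad), t.range_m * std::sin(t.azimuth_rad)};
}

// Keep targets whose radial speed exceeds min_speed_mps (assumes ego-motion is already removed).
std::vector<RadarTarget> moving_targets(const std::vector<RadarTarget>& targets, double min_speed_mps) {
    assert(min_speed_mps >= 0.0);
    std::vector<RadarTarget> moving;
    for (const RadarTarget& t : targets) {
        if (std::abs(t.radial_velocity_mps) > min_speed_mps) moving.push_back(t);
    }
    return moving;
}
```

Compared with a lidar scan of many thousands of points, a list like this is compact, which is part of why radar gives detections rather than a full picture of the scene.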

An example of radar points overlaid on lidar points is shown in Figure 4. The large pink points are from the radar unit and the white points are from a lidar. Radar accurately detects large obstacles such as bushes and trees, as well as moving objects such as people.
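Overlaying one sensor's points on another's requires expressing both in a common coordinate frame. How the overlay in Figure 4 was produced is not described here; a minimal sketch, assuming the radar-to-lidar extrinsic calibration (a rotation R and translation t) is known, applies p_lidar = R·p_radar + t. The type and function names are hypothetical, and `yaw_and_offset` covers only the special case of sensors that differ by a yaw angle and an offset.

```cpp
#include <cassert>
#include <cmath>

struct Point3 {
    double x, y, z;
};

// Rigid transform from the radar frame to the lidar frame: p_lidar = R * p_radar + t.
// In practice R and t would come from extrinsic calibration of the sensor bar.
struct RigidTransform {
    double R[3][3];
    double t[3];
};

Point3 transform_point(const RigidTransform& T, const Point3& p) {
    const double in[3] = {p.x, p.y, p.z};
    double out[3];
    for (int i = 0; i < 3; ++i) {
        out[i] = T.t[i];
        for (int j = 0; j < 3; ++j) {
            out[i] += T.R[i][j] * in[j];
        }
    }
    return {out[0], out[1], out[2]};
}

// Build a transform for sensors that differ only by a yaw angle (about z) and an offset.
RigidTransform yaw_and_offset(double yaw_rad, double tx, double ty, double tz) {
    const double c = std::cos(yaw_rad);
    const double s = std::sin(yaw_rad);
    return {{{c, -s, 0.0}, {s, c, 0.0}, {0.0, 0.0, 1.0}}, {tx, ty, tz}};
}
```

Errors in R or t show up directly as radar points that sit beside, rather than on, the lidar returns from the same object.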

Figure 4: Radar overlaid on lidar.

Algorithms

After researching and reading many papers about point cloud processing, image classification, perception, and object detection, I found three main approaches. Deterministic algorithms use physical properties of point clouds and geometry-based rules to detect objects. Supervised learning approaches are well used and proven but require large amounts of hand-labelled training data prepared offline. Self-supervised learning methods are a recent development that do not require hand-labelled data but are more complex.

I chose to implement a deterministic algorithm with special considerations for agricultural applications. The main benefit of this approach is that it does not require large amounts of hand-labelled training data. Training data for agricultural self-driving applications is not openly available right now the way it is for urban environments. Thus, the deterministic approach is a good way to begin general perception development.

Once sufficient data is collected and labelled, further development can be done with supervised learning approaches. Data labelling (annotation) is the process of manually identifying classes of objects in sensor input data. Once labelled, the data can be used to train a machine learning model to quickly identify and classify the objects it was trained to find. This can be done using data from cameras or lidars. The performance of the model depends on the quantity, quality, and diversity of the training data.

Self-supervised learning methods avoid the need for hand-annotated training data by using the output of one sensor as labels to train a model that takes another sensor's data as input. For example, the output of the radar sensor, which reliably detects objects, could be used to train a machine learning model that uses camera or lidar data. This way, the benefits of both sensors can be realized, and no manual annotation process is needed.
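A minimal sketch of the labelling step in such a scheme, in which radar targets serve as automatic labels for lidar clusters. It assumes both are already expressed as 2D positions in a shared vehicle frame and uses a simple distance gate; `label_clusters_from_radar` and the gate value are hypothetical, and published self-supervised methods are generally more sophisticated.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// 2D position in a shared vehicle frame (metres).
struct Point2 {
    double x, y;
};

// A lidar cluster (summarized by its centroid) is labelled "obstacle" (true) when
// any radar target lies within gate_m of it; otherwise it is labelled false.
std::vector<bool> label_clusters_from_radar(const std::vector<Point2>& cluster_centroids,
                                            const std::vector<Point2>& radar_targets,
                                            double gate_m) {
    assert(gate_m > 0.0);
    std::vector<bool> labels;
    labels.reserve(cluster_centroids.size());
    for (const Point2& c : cluster_centroids) {
        bool matched = false;
        for (const Point2& r : radar_targets) {
            if (std::hypot(c.x - r.x, c.y - r.y) <= gate_m) {
                matched = true;
                break;
            }
        }
        labels.push_back(matched);
    }
    return labels;
}
```

The resulting labels would take the place of hand annotation when training the lidar- or camera-based model.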

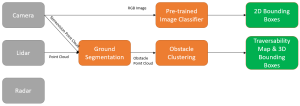

Figure 5 shows the data flow architecture designed for this perception test system. Obstacles are detected and classified in 2D images by a pretrained supervised learning model. Point clouds from stereovision cameras and lidars follow a different pipeline. The first step in the point cloud pipeline is the deterministic ground segmentation algorithm, which I implemented and optimized in C++ using high-performance libraries. Ground segmentation is the process of determining which points represent ground that can be safely driven over and which points represent non-traversable obstacles. The points classified as obstacles are then grouped into discrete clusters. Each cluster gives an approximate volume and position for an obstacle. Information about obstacle location, size, and direction can then be passed to the module responsible for driving.
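The specific deterministic algorithm is not described here, so the sketch below substitutes a simple grid-based height-difference method to show what ground segmentation produces: one ground/obstacle flag per point. It is not the JCA algorithm. It assumes z points up in a level vehicle frame, and `segment_ground` and its parameters are hypothetical. A known weakness is that a cell containing only obstacle returns (for example, the top of a large object with no ground visible beneath it) would be mislabelled as ground.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <unordered_map>
#include <vector>

struct Point3 {
    double x, y, z;
};

// Grid-based ground segmentation (a simple stand-in, NOT the JCA algorithm).
// Points are binned into square x-y cells of size cell_m. Within each cell, a point more
// than max_step_m above the cell's lowest point is an obstacle; the rest are ground.
// Returns one flag per input point (true = obstacle).
std::vector<bool> segment_ground(const std::vector<Point3>& cloud, double cell_m, double max_step_m) {
    assert(cell_m > 0.0 && max_step_m >= 0.0);
    // Pack the two signed cell indices into one 64-bit key (assumes indices fit in 32 bits).
    auto cell_key = [cell_m](const Point3& p) {
        const auto ix = static_cast<std::int64_t>(std::floor(p.x / cell_m));
        const auto iy = static_cast<std::int64_t>(std::floor(p.y / cell_m));
        return (static_cast<std::uint64_t>(ix) << 32) ^ (static_cast<std::uint64_t>(iy) & 0xffffffffULL);
    };

    // Pass 1: lowest z in each occupied cell, used as the local ground estimate.
    std::unordered_map<std::uint64_t, double> min_z;
    for (const Point3& p : cloud) {
        const auto key = cell_key(p);
        const auto it = min_z.find(key);
        if (it == min_z.end() || p.z < it->second) {
            min_z[key] = p.z;
        }
    }

    // Pass 2: label each point by its height above the local ground estimate.
    std::vector<bool> is_obstacle;
    is_obstacle.reserve(cloud.size());
    for (const Point3& p : cloud) {
        is_obstacle.push_back(p.z - min_z.at(cell_key(p)) > max_step_m);
    }
    return is_obstacle;
}
```

For example, with 0.5 m cells and a 0.2 m step threshold, a point 0.8 m above the lowest return in its cell is flagged as an obstacle, while a point 5 cm above it is treated as ground.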

The radar data was collected and analyzed alongside the other data sources, but it was not processed further at this stage. The radar results were positive and appear promising for object detection.

Figure 5: Perception research architecture.

Sensor Test Rig

Collecting data in real-world application environments was a large and important step in developing the perception framework. I designed a portable, battery-powered test rig that could be easily mounted onto vehicles for data collection. It consisted of a 1-meter aluminum mounting bar, custom sheet metal sensor mounts, five sensors, and JCA’s cutting-edge high-performance controller, the Eagle.

Figure 6: Sensor bar mounted on test machine.

The Eagle controller (see Figure 7) is a new ruggedized controller added to JCA’s fleet of mobile computation solutions. The Eagle is the world’s most powerful ruggedized processing platform made for autonomous off-highway machine applications. It features the NVIDIA Jetson Xavier SOM, which provides advanced edge-computing capabilities, dual RTK-GPS for orientation and localization, multiple high-speed (Gigabit Ethernet) and wireless communication interfaces, cell modem capabilities, up to 8 camera interfaces, 4 CAN/J1939 interfaces, and inputs/outputs made for machine control applications.

Figure 7: JCA Eagle Controller.

The test rig was taken to two separate locations for data collection. The setups can be seen in Figure 8 and Figure 9.

Figure 8: Data collection location 1

Figure 9: Data collection location 2.

Initial Results

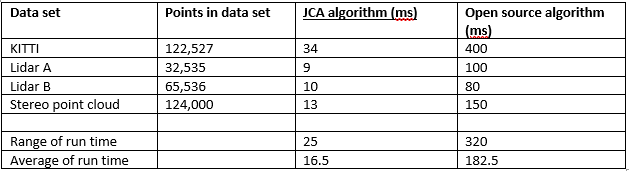

I compared the performance of my implementation of the algorithm with popular, widely used open-source libraries that perform the same task. Initially, it was comparable to them in run time and accuracy. Profiling the software revealed some bottlenecks, and after optimizing them the algorithm ran in roughly 90% less time than the available libraries, with matched or improved accuracy. I tested these algorithms on various datasets from various sources. Each sensor produces point clouds differently depending on how it operates. For example, a 3D spinning lidar stores its points in a different order than a stereovision camera does. These kinds of variations affect the run time of the algorithm and should be tested.

The results are summarized in Table 1. The JCA algorithm's run time varied over a range of 25 ms, while the open-source algorithm's run time varied over a much larger range of 320 ms. The average run time of the JCA algorithm was 16.5 ms, while the open-source algorithm averaged 182.5 ms (the JCA algorithm's average run time is about 91% lower).
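The average run times of 16.5 ms (JCA) and 182.5 ms (open source) can be expressed either as a fractional reduction in run time or as a speedup factor:

```latex
1 - \frac{t_{\text{JCA}}}{t_{\text{open source}}} = 1 - \frac{16.5\ \text{ms}}{182.5\ \text{ms}} \approx 0.91
\qquad
\frac{t_{\text{open source}}}{t_{\text{JCA}}} = \frac{182.5\ \text{ms}}{16.5\ \text{ms}} \approx 11.1
```

In other words, the JCA implementation needs roughly 9% of the open-source library's average run time, or runs about 11 times faster.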

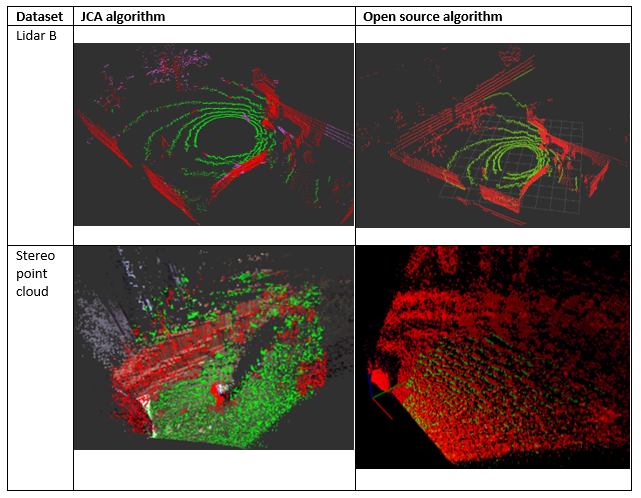

Table 2 compares the results visually. For a lidar input point cloud, the classification accuracy is very similar, but the JCA algorithm's run time is 87.5% shorter. For a stereovision point cloud, the open-source algorithm cannot make sense of the noisy, discretized point cloud and classifies everything as an obstacle. Meanwhile, the JCA algorithm accurately classifies the stereovision point cloud, and its run time is 91.3% shorter than the open-source algorithm's.

These initial results showed that the JCA algorithm I implemented was robust, very fast, and accurate.

Table 1: Algorithm run time comparison.

Table 2: Algorithm accuracy comparison.

Field Results

After validating the algorithm against alternative algorithms on various datasets, I sought to test it on field data. The field data was collected using the test rig system described earlier.

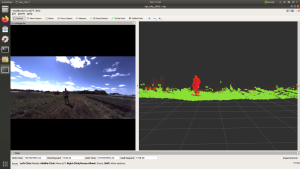

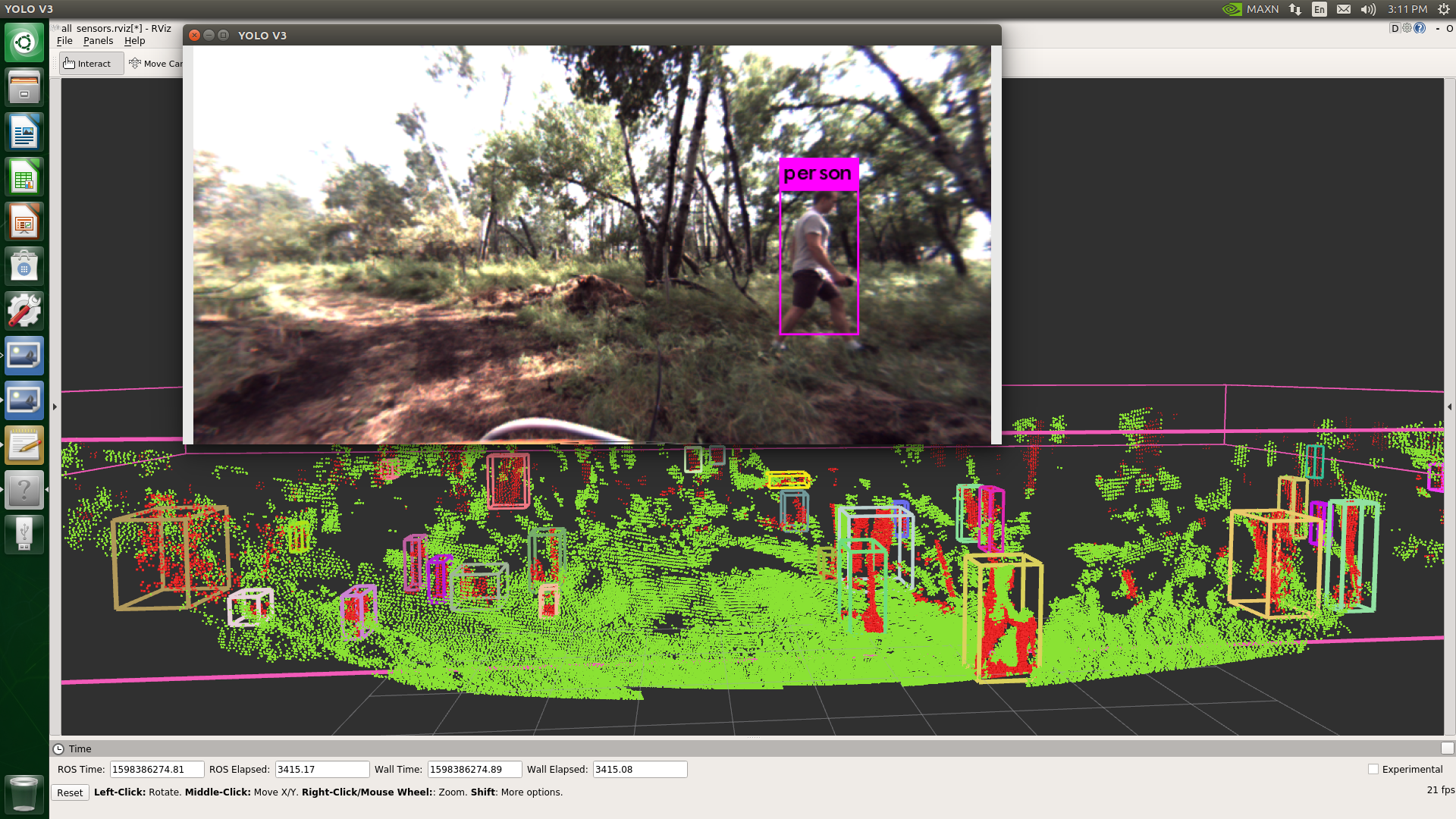

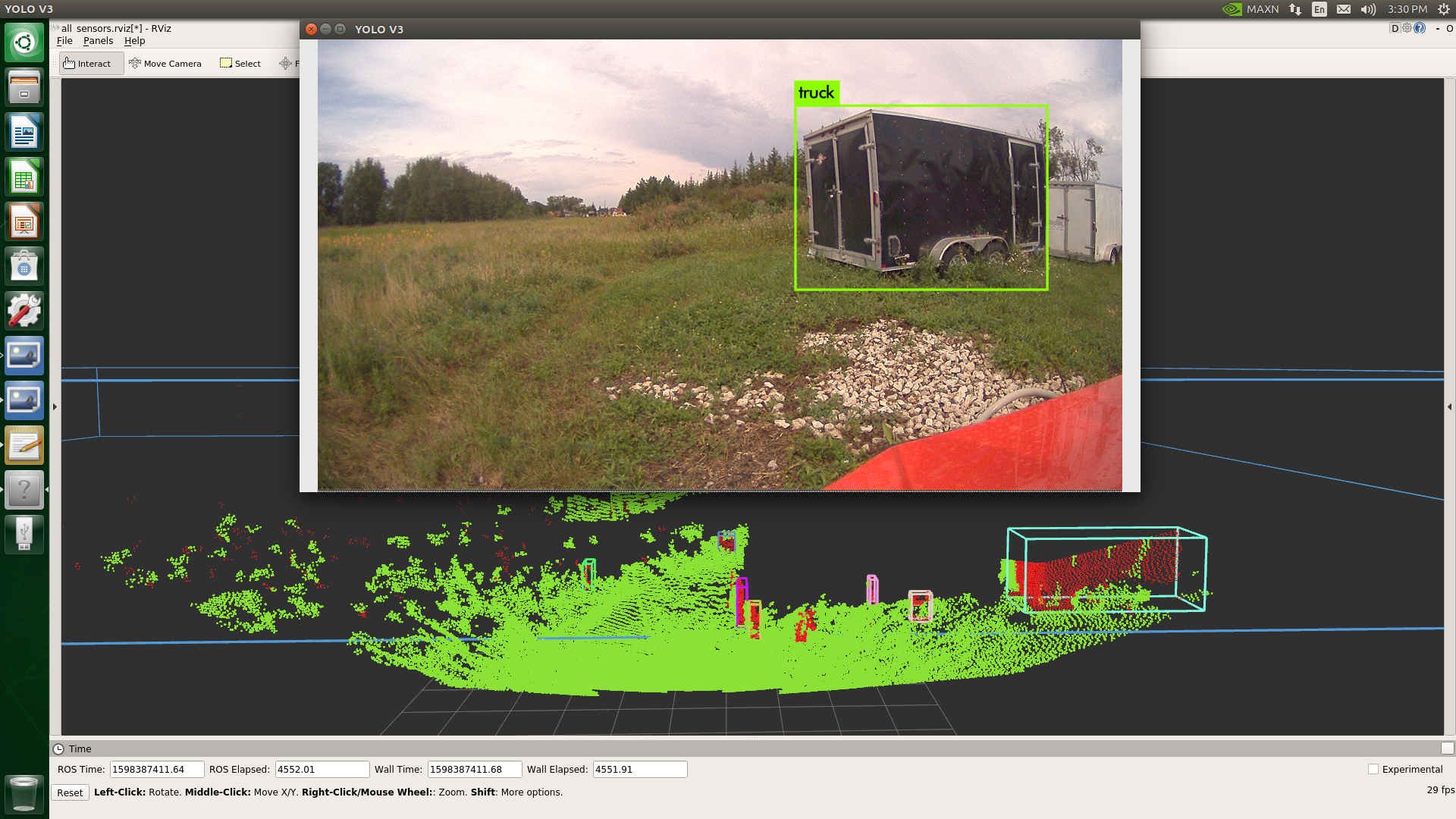

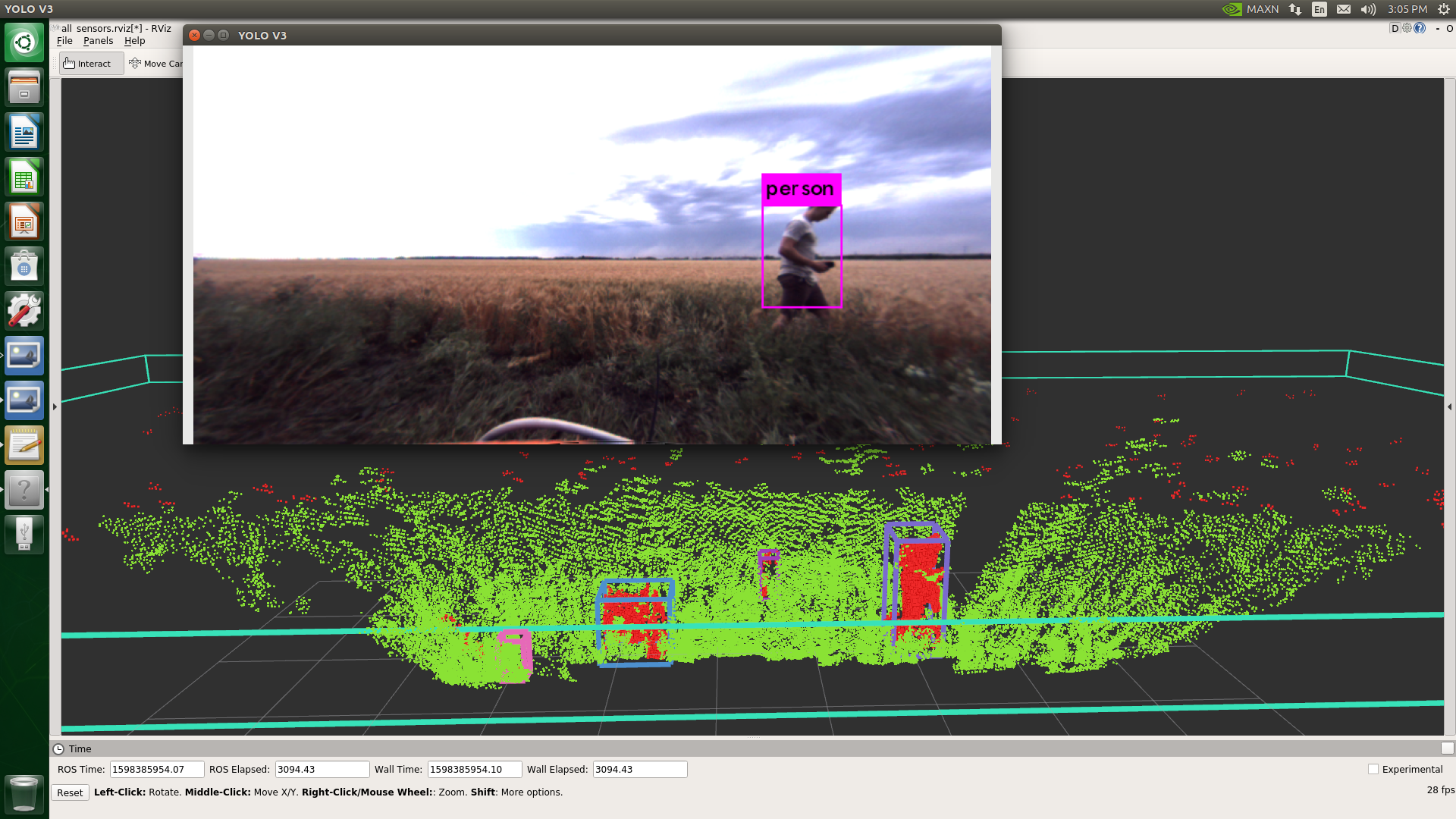

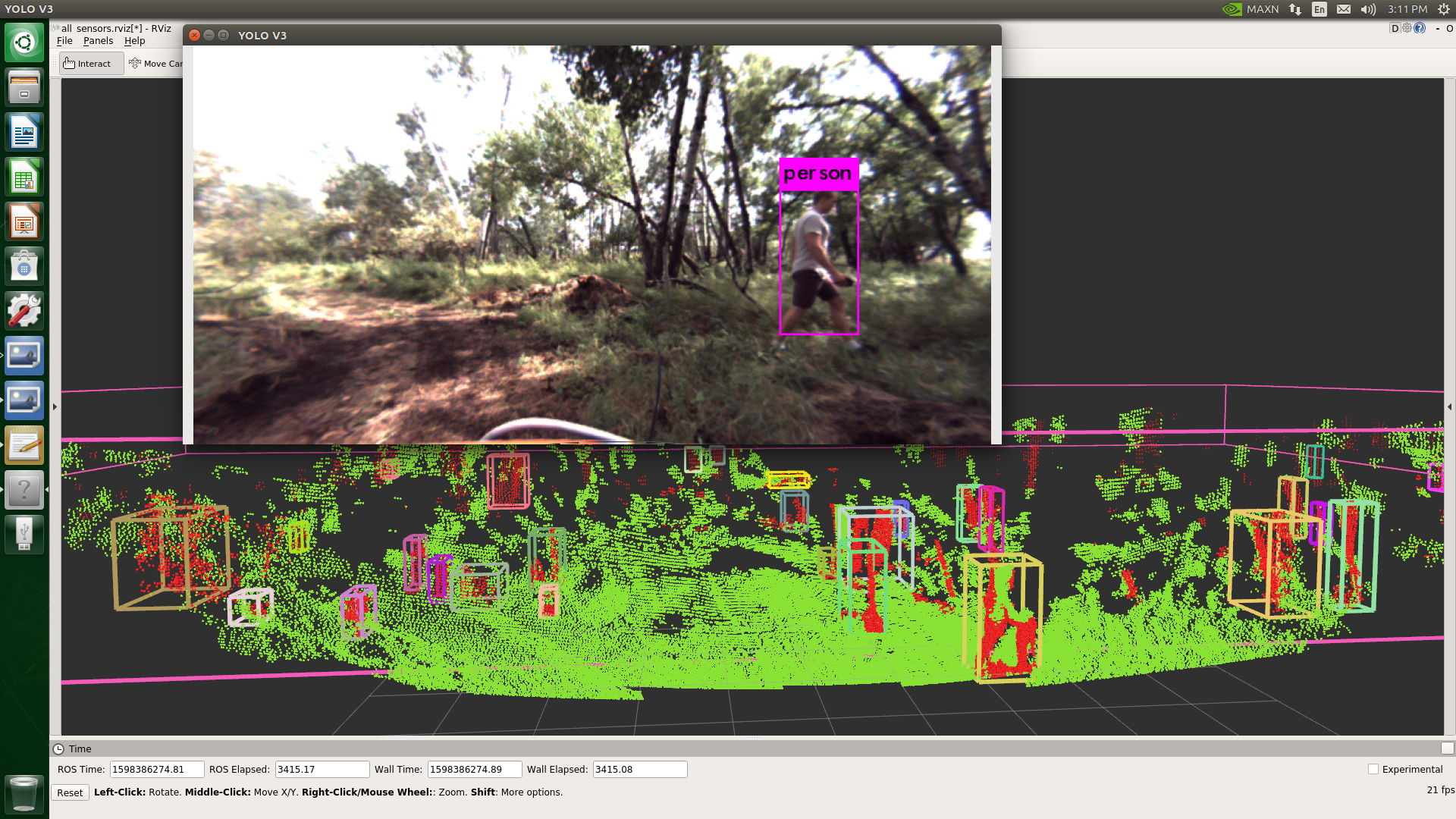

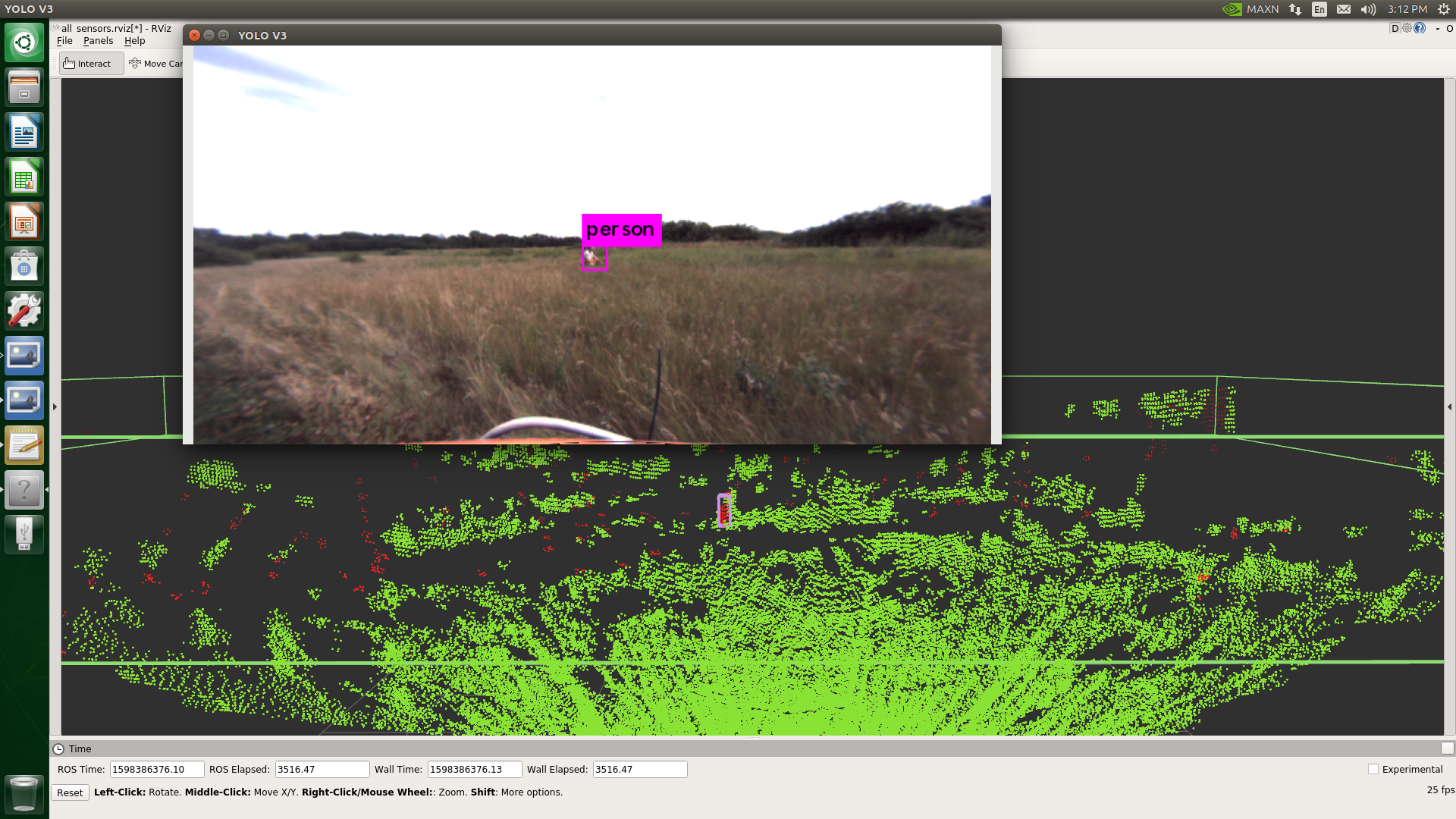

The algorithm proved just as accurate and fast on the live field data as on the offline data. I was also able to evaluate clustering algorithms and add a 3D bounding box visualization tool. I additionally evaluated a pre-trained machine learning model that found and classified objects such as people, cars, trucks, dogs, horses, and elephants. Without being specifically trained to find the objects of interest, however, such a model will only find the objects it has already been trained on.
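The clustering algorithms that were evaluated are not named here. As one common example, Euclidean clustering (the approach behind PCL's `pcl::EuclideanClusterExtraction`) links obstacle points that lie within a distance tolerance of each other, and each resulting group can be wrapped in an axis-aligned 3D bounding box. The sketch below uses a brute-force neighbour search for clarity; `euclidean_clusters`, `bounding_box`, and their parameters are hypothetical names, and a practical version would use a k-d tree or voxel grid.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

struct Point3 {
    double x, y, z;
};

// Axis-aligned 3D bounding box (lo = minimum corner, hi = maximum corner).
struct Box3 {
    Point3 lo, hi;
};

// Euclidean clustering by breadth-first search: points within tolerance_m of each other
// end up in the same cluster; clusters smaller than min_points are discarded as noise.
// Brute-force O(n^2) neighbour search, for illustration only.
std::vector<std::vector<std::size_t>> euclidean_clusters(const std::vector<Point3>& pts,
                                                         double tolerance_m, std::size_t min_points) {
    assert(tolerance_m > 0.0);
    const double tol2 = tolerance_m * tolerance_m;
    std::vector<bool> visited(pts.size(), false);
    std::vector<std::vector<std::size_t>> clusters;
    for (std::size_t seed = 0; seed < pts.size(); ++seed) {
        if (visited[seed]) continue;
        std::vector<std::size_t> cluster;
        std::queue<std::size_t> frontier;
        frontier.push(seed);
        visited[seed] = true;
        while (!frontier.empty()) {
            const std::size_t i = frontier.front();
            frontier.pop();
            cluster.push_back(i);
            for (std::size_t j = 0; j < pts.size(); ++j) {
                if (visited[j]) continue;
                const double dx = pts[i].x - pts[j].x;
                const double dy = pts[i].y - pts[j].y;
                const double dz = pts[i].z - pts[j].z;
                if (dx * dx + dy * dy + dz * dz <= tol2) {
                    visited[j] = true;
                    frontier.push(j);
                }
            }
        }
        if (cluster.size() >= min_points) clusters.push_back(cluster);
    }
    return clusters;
}

// Bounding box of the points selected by idx (idx must not be empty).
Box3 bounding_box(const std::vector<Point3>& pts, const std::vector<std::size_t>& idx) {
    assert(!idx.empty());
    Box3 box{pts[idx[0]], pts[idx[0]]};
    for (std::size_t i : idx) {
        box.lo.x = std::min(box.lo.x, pts[i].x);
        box.lo.y = std::min(box.lo.y, pts[i].y);
        box.lo.z = std::min(box.lo.z, pts[i].z);
        box.hi.x = std::max(box.hi.x, pts[i].x);
        box.hi.y = std::max(box.hi.y, pts[i].y);
        box.hi.z = std::max(box.hi.z, pts[i].z);
    }
    return box;
}
```

Run on the obstacle points left after ground segmentation, each box's dimensions give an approximate obstacle size and its centre a position that can be passed on for driving decisions.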

Figure 10: Person walking through open area.

Figure 11: Truck identified in field area.

Figure 12: Person walking by wheat field.

Figure 13: Person walking in busy forested area.

Figure 14: Person crouching in tall grass.

Figure 15: Bushes identified down gravel road.

Figure 16: Person walking in tall grass field.

Figure 17: Bush detected in tall grass field.

Figure 18: Person and tractor detected in farmer’s field.

Conclusion

This was a very interesting and challenging project to get to work on. At the end of my summer at JCA I have completed a large amount of research into one of the puzzle pieces in the autonomous navigation stack. There are still more technologies and approaches to research, but this provides a promising start. I am excited to see how the autonomous navigation stack will grow in features and capabilities.

I was also able to test the capabilities of the JCA Eagle controller. It proved to be highly reliable, tough, and very powerful. Its GPU hardware handled machine learning tasks at real-time speeds and will accelerate object detection libraries. The JCA Eagle controller is critical for the further development of real-time perception capabilities for agricultural applications.

Even though I was able to research and test quite a few components, there are still more topics I would like to research in this area, such as self-supervised learning models, monocular cameras, and machine learning classifiers for point cloud data.

Next steps include using the object detection outputs for intelligent dynamic path planning and additional safety alarm systems. This will allow the autonomous machine to change its path and make emergency stops in unforeseen circumstances, bringing JCA closer to becoming a leader in autonomous machinery.
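As a sketch of how detection outputs could feed a safety alarm, the function below requests an emergency stop when any obstacle footprint overlaps a straight corridor ahead of the machine within its stopping distance. This is a hypothetical illustration, not a planned JCA design: the straight-corridor model, the `Box2` type, the function names, and all parameters (reaction time, deceleration, margin) are assumptions, and a real system would follow the planned path and use validated braking figures.

```cpp
#include <cassert>
#include <vector>

// Obstacle footprint in the vehicle frame (x forward, y left), in metres.
struct Box2 {
    double x_min, x_max, y_min, y_max;
};

// Stopping distance: distance travelled during the reaction time plus braking distance v^2 / (2a).
double stopping_distance_m(double speed_mps, double reaction_s, double decel_mps2) {
    assert(speed_mps >= 0.0 && reaction_s >= 0.0 && decel_mps2 > 0.0);
    return speed_mps * reaction_s + speed_mps * speed_mps / (2.0 * decel_mps2);
}

// True if any obstacle overlaps the straight corridor ahead of the machine
// (half_width_m either side of the centreline) within the stopping distance plus a margin.
bool should_emergency_stop(const std::vector<Box2>& obstacles, double speed_mps, double half_width_m,
                           double reaction_s, double decel_mps2, double margin_m) {
    const double reach_m = stopping_distance_m(speed_mps, reaction_s, decel_mps2) + margin_m;
    for (const Box2& b : obstacles) {
        const bool ahead = b.x_max > 0.0 && b.x_min < reach_m;
        const bool in_corridor = b.y_max > -half_width_m && b.y_min < half_width_m;
        if (ahead && in_corridor) return true;
    }
    return false;
}
```

For example, at 4 m/s with a 0.5 s reaction time and 2 m/s² deceleration, the stopping distance is 6 m, so with a 1 m margin an obstacle 5 m ahead in the corridor triggers a stop while one 8 m ahead does not.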

About JCA Electronics

With operations in both Canada and the USA, JCA Electronics provides advanced technology solutions for off-highway mobile machines, primarily in agricultural, construction, and mining industries. JCA Electronics’ engineering and manufacturing operations have been providing customized electrical, electronics, and software systems for OEMs since 2002. For more information see www.jcaelectronics.ca or contact us at info@jcaelectronics.ca.